System Design Tips for MediaConvert

In this post I'm going to share some tips on letting UX and SLA lead the design of your AWS MediaConvert integration. I'll do this by telling you a story about a fictional social media platform, VidBuddies, and its fictional CEO (my cat Patches).

Meet my cat Patches. She is an incredibly talented engineer and entrepreneur. Patches is building a social media video platform called VidBuddies where users (mainly other cats) can contribute content and interact with each other (think TikTok or YouTube Shorts).

One of the challenges of building a video-based platform for the web, let alone a video-first social media platform, is dealing with encoding. Patches wants to make sure that user-contributed videos load over the CDN quickly, while keeping bandwidth costs in check. So, she needs to encode those contributed videos into a nice delivery format. Users can upload all the MOV and ProRes video they want, but it's coming out the other end as HLS or DASH.

To encode/transcode videos, Patches needed, well, encoders.

Patches didn't want to manage FFmpeg and EC2 instances directly; she was already far too busy wrangling the rest of the platform and apps (who has the time to evaluate performance on different CPU architectures? It's not like we're Jan Ozer!). So she decided to use AWS MediaConvert, a well-regarded encode/transcode service. All was well: she was quite satisfied with the results while VidBuddies was new and MAU was relatively low.

But then something happened: a prominent, video-focused social media app called BipBop was suddenly banned in Patches' country, and a veritable army of new users started flooding Patches' app! At first Patches was overjoyed: so many new users! VidBuddies was blasting off to the moon! MediaConvert was happily chugging along, dealing with the mass of new user content coming in.

Two months after the BipBop ban, VidBuddies' MAU had soared. Its new users had uploaded three hundred and sixty thousand videos in that time, utterly dwarfing VidBuddies' pre-ban volume. And the MediaConvert bill for those two months? A whopping $60,000. Other infrastructure bills had gone up too, like storage and CDN bandwidth, but Patches decided to tackle one thing at a time and focus on encoding.

The AWS Elemental suite is like any other AWS service: it provides all the capacity you could ever need, but that capacity isn't free. Practitioners have to be disciplined, and the architecture has to be well planned, to avoid watching all of their cash fly away towards Amazon's billing department.

Let's see if Patches can keep VidBuddies afloat! Month three is starting, and Patches' business bank account is looking pretty desperate.

The Reserved Queue

Patches took a look at her usage and realized something: "Why am I offering such an aggressive encoding SLA to users who don't even pay to use this platform?"

A great question, Patches, a great question. MediaConvert's On-Demand Queue (ODQ) is a killer option when you need a video encoded as fast as possible. But do these freeloading users really need "as fast as possible"? Probably not.

User videos are getting out there fast, but it’s costing VidBuddies dearly.

Patches then decided to create a Reserved Queue (RQ) and purchased some reserved transcode slots (RTS) for it.

What is a Reserved Queue?

A MediaConvert reserved queue works like a reservation: you buy monthly capacity (in the form of reserved transcode slots) at a fixed price, committed for a year. This creates a new, region-specific queue with fixed capacity in your AWS account.

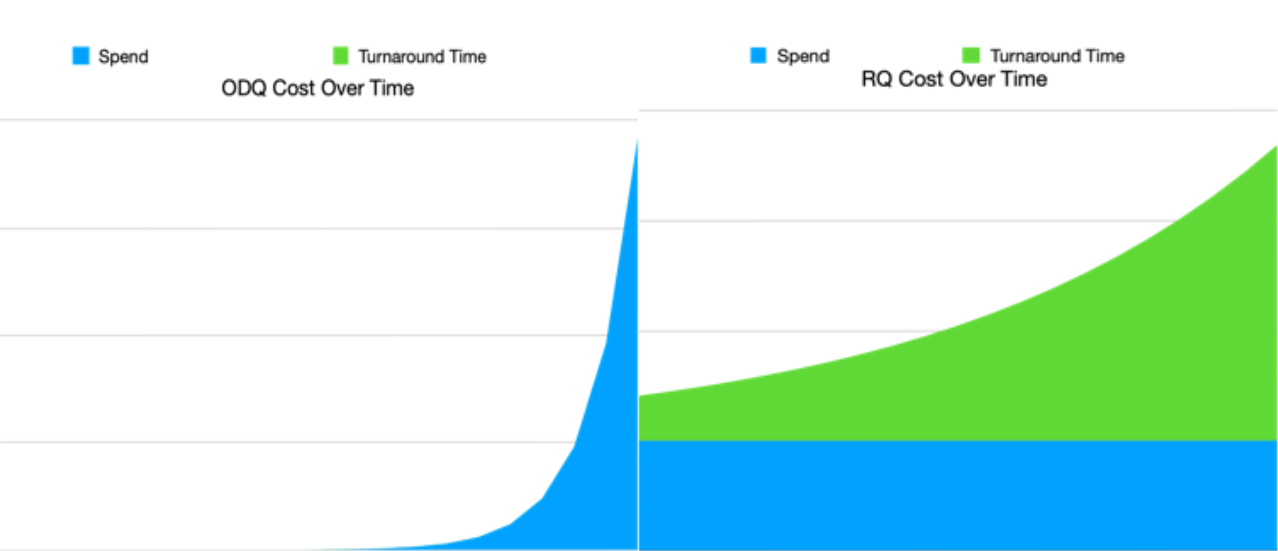

An RQ flips the MediaConvert billing model. With ODQ, you have two factors to worry about, volume and spend, and spend goes up with volume. With RQ, you now have three factors: volume, turnaround, and spend. Spend stays fixed, but the new factor, turnaround, rises with volume: once the reserved slots are saturated, every extra job waits behind the backlog, and the wait grows roughly linearly with the size of that backlog.
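To make that trade-off concrete, here is a toy model in Python. It is a sketch under simplifying assumptions, not how MediaConvert actually schedules or bills: ODQ spend is a flat per-minute rate, the RQ runs a fixed number of identical jobs at once, and every job takes the same time. The 25-concurrent-job, 9-RTS, and $400-per-RTS figures are taken or inferred from later in this story, and the function names are hypothetical.

```python
import math


def odq_monthly_spend(output_minutes: float, rate_per_minute: float) -> float:
    """On-demand: spend grows in step with volume (flat illustrative rate)."""
    return output_minutes * rate_per_minute


def rq_monthly_spend(rts_count: int, price_per_rts: float) -> float:
    """Reserved: spend is set by the slot count, not by volume."""
    return rts_count * price_per_rts


def rq_worst_turnaround(backlog_jobs: int, concurrent_jobs: int, job_minutes: float) -> float:
    """Minutes until the last job in a backlog finishes, assuming the queue
    runs `concurrent_jobs` jobs at once and each one takes `job_minutes`."""
    waves = math.ceil(backlog_jobs / concurrent_jobs)
    return waves * job_minutes


# RQ spend stays flat while the last job's turnaround climbs with the backlog.
for backlog in (25, 50, 100, 200):
    minutes = rq_worst_turnaround(backlog, concurrent_jobs=25, job_minutes=5)
    print(f"{backlog:>3} queued jobs -> last one done after {minutes} min, "
          f"RQ spend still ${rq_monthly_spend(9, 400):,}")
```

Doubling the backlog roughly doubles the wait for the last job, while the RQ line on the bill never moves: turnaround has replaced spend as the thing that scales.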

To use this queue, point to its ARN in your MediaConvert jobs:

```json
{
  ...
  "Queue": "arn:aws:mediaconvert:{REGION}:{ACCOUNT}:queues/vid-buddies-queue",
  ...
}
```

In general (assuming your encoding needs are consistent and high enough), a reserved queue can be cost-effective. Take VidBuddies as an example: with an AVC ladder of four SD and two HD renditions, VidBuddies was producing 4.3 million output minutes per month (on the MediaConvert basic tier).

At ODQ pricing, this led to a staggering $30,000-per-month encoding bill.
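The 4.3 million figure is straightforward multiplication, using the upload volume and average video length Patches measures in the next paragraph: every input minute becomes one output minute per rendition. A minimal sketch (the helper name is hypothetical):

```python
def monthly_output_minutes(videos_per_month: int, avg_minutes: float, renditions: int) -> float:
    """Each rendition in the ladder produces one output minute per input minute."""
    return videos_per_month * avg_minutes * renditions


sd = monthly_output_minutes(180_000, 4, renditions=4)   # four SD rungs
hd = monthly_output_minutes(180_000, 4, renditions=2)   # two HD rungs
print(f"SD: {sd:,.0f}  HD: {hd:,.0f}  total: {sd + hd:,.0f}")
# SD: 2,880,000  HD: 1,440,000  total: 4,320,000
```

Keeping the SD and HD totals separate matters because MediaConvert prices HD output minutes above SD ones; the actual per-minute rates depend on region and tier and are not reproduced here.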

Patches calculated that the average user video was about 4 minutes long and took about 5 minutes to transcode. Her users were uploading about 180,000 videos every month. She decided she was fine with a 15-minute turnaround time for her SLA (nearly four times the length of the average video... it should be fine, she thought). Based on this, she bought 9 RTS, capable of handling 25 simultaneous 5-minute jobs with a 15-minute turnaround for each.
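A quick sanity check on that sizing is Little's law: the average number of jobs in flight equals the arrival rate times how long each job runs. This sketch assumes a 30-day month and uploads spread evenly around the clock, which real traffic never is; the constant and function names are illustrative.

```python
MINUTES_PER_MONTH = 30 * 24 * 60  # assumes a 30-day month


def average_concurrency(jobs_per_month: float, minutes_per_job: float) -> float:
    """Little's law, L = lambda * W, with lambda in jobs/minute and W in minutes."""
    arrival_rate = jobs_per_month / MINUTES_PER_MONTH
    return arrival_rate * minutes_per_job


busy = average_concurrency(180_000, minutes_per_job=5)
print(f"~{busy:.1f} jobs running at any moment on average")
# ~20.8 jobs running at any moment on average
```

An average of roughly 21 jobs in flight sits just under the 25 concurrent jobs Patches bought, which leaves very little headroom: any burst above the average has to wait in line.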

MediaConvert provides a simple calculator for estimating RTS needs in the AWS Console; I recommend starting with this tool for your own use case.

VidBuddies' MediaConvert bill dropped from $30,000 per month to $3,600, an 88% reduction. Patches celebrated her OpEx victory!

Maybe they do need a faster SLA?

Good grief, another problem?!

Users have been complaining that it takes too long to publish their videos. Patches knows why: the RQ is loaded to the brim and struggling to keep up. Patches solved her OpEx problem but created a UX problem in the process, and UX problems risk driving users away.

"Okay, maybe there is a way I can offer an okay SLA without spending too much?" Patches asked. A great question again, Patches!

Queue Hop

Patches activated MediaConvert's "queue hop" behavior on her RQ jobs: if a job waits more than 1 minute to start, it is sent over to the ODQ. (The "Default" queue in the destination ARN is the region's on-demand queue.) Huzzah, the SLA is saved!

```json
{
  ...
  "HopDestinations": [
    {
      "WaitMinutes": 1,
      "Queue": "arn:aws:mediaconvert:{REGION}:{ACCOUNT}:queues/Default",
      "Priority": 0
    }
  ]
}
```
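Why does a one-minute hop push so much work to the ODQ? A small discrete-event simulation illustrates the effect. It is a simplified sketch: the RQ is modeled as a fixed number of identical parallel workers serving jobs first come, first served, with fixed job durations. MediaConvert's real scheduler, job priorities, and RTS accounting are not modeled, and `simulate_hops` is a hypothetical helper.

```python
import heapq


def simulate_hops(arrivals, slots, job_minutes, hop_after):
    """Return (jobs run on the RQ, jobs that hopped to the ODQ).

    `arrivals` are job arrival times in minutes, in ascending order. A job whose
    wait for a free RQ slot would exceed `hop_after` minutes hops to the ODQ
    and never occupies an RQ slot.
    """
    free_at = [0.0] * slots            # time at which each RQ slot frees up
    heapq.heapify(free_at)             # min-heap: free_at[0] is the earliest
    on_rq = hopped = 0
    for t in arrivals:
        earliest = free_at[0]
        wait = max(0.0, earliest - t)
        if wait > hop_after:
            hopped += 1                # goes to the ODQ; RQ slots untouched
            continue
        heapq.heapreplace(free_at, t + wait + job_minutes)
        on_rq += 1
    return on_rq, hopped


steady = [i * 0.25 for i in range(1_000)]    # 4 uploads/minute, evenly spaced
spiky = sorted(steady + [120.0] * 40)        # same, plus 40 uploads at minute 120
print(simulate_hops(steady, slots=25, job_minutes=5, hop_after=1))  # (1000, 0)
print(simulate_hops(spiky, slots=25, job_minutes=5, hop_after=1))
```

Below capacity nothing hops; a single burst of uploads is enough to send a chunk of jobs to on-demand pricing, and a sustained load above capacity would do so continuously.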

But then the AWS bill came in... the new ODQ usage tacked about $11,000 onto the bill! Not as bad as before, but still not great. And users were signing up every day, so usage certainly wasn't going to go down.

With the ODQ back in the mix, Patches' MediaConvert bill was rising once again. Even with the RQ taking on a decent chunk of the encoding work, the sheer volume of new video was still overwhelming it and forcing too much work onto the ODQ. Patches needed to get her transcoding spend under control and predictable.

Patches could increase the RQ size, but what about idle time? Raising the RTS count to something like 20 would cover her current demand for $8,000 per month, but she would pay for those slots even while they sat idle, and she'd still be chasing capacity and vulnerable to sudden spikes in usage, just like now.
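The numbers in this story imply a price of about $400 per RTS per month ($3,600 for 9 slots, $8,000 for 20). Treat that as inferred from the example rather than a quote from the AWS price list, which varies by region and changes over time. Comparing the options side by side:

```python
PRICE_PER_RTS = 400      # inferred from this story: $3,600 / 9 RTS
ODQ_ONLY = 30_000        # VidBuddies' all-on-demand monthly bill
ODQ_OVERFLOW = 11_000    # extra ODQ spend once queue hop was enabled

options = {
    "ODQ only": ODQ_ONLY,
    "9 RTS + queue hop": 9 * PRICE_PER_RTS + ODQ_OVERFLOW,
    "20 RTS, no hop": 20 * PRICE_PER_RTS,
}

for name, monthly in options.items():
    saving = 1 - monthly / ODQ_ONLY
    print(f"{name:<18} ${monthly:>7,}  ({saving:.0%} saved vs. ODQ only)")
```

The 20-RTS option is cheapest on paper, but only while demand stays near today's level: idle slots still bill during quiet hours, and any spike beyond what 20 RTS can absorb still waits in line.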

Patches was going to have to get creative to balance cost and SLA.

ODQ For Me, but not for Thee

Patches took a step back to think more about the SLA. What does the user actually want here? Do they really want the entire HLS ladder encoded and ready for delivery? Do they even know what that means? Probably not.

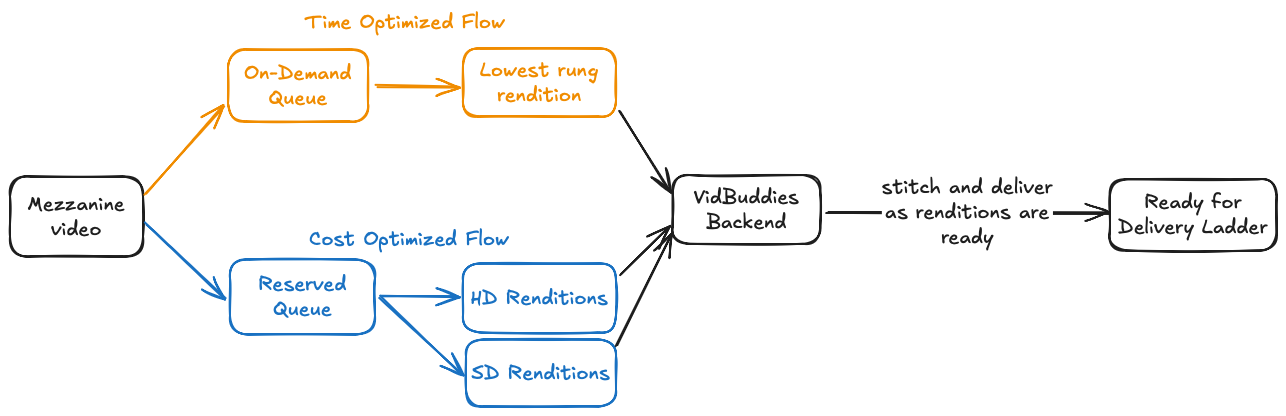

Is the hypothetical user simply happy once their video is published? What if Patches sent part of the work to the RQ and another part to the ODQ? That way, the ODQ could get something out there quickly while the RQ worked on the rest. She'd need her backend code to cobble the outputs together and do some cache invalidation, but that didn't seem overly complicated to her.

MediaConvert bills by "normalized minutes," with HD minutes being "heavier" than SD minutes; in practice, HD content costs more to encode than SD. Patches decided to split the encoding workflow roughly along those lines.

The ladder has four SD renditions and two HD renditions. Patches split her encoding workflow so that the very bottom SD rendition would get its own MediaConvert job: sent to the RQ first, but with a hop time of 1 minute. The rest of the renditions would be sent strictly to the RQ with no hop time (or perhaps a 5- to 10-minute hop time, depending on demand).
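Here is a sketch of that split as two sets of queue-routing fields, one per job. "Queue" and "HopDestinations" (with "WaitMinutes", "Queue", and "Priority") are the same job fields used in the JSON snippets above; the rendition names, placeholder ARNs, and the `job_fields`/`split_ladder` helpers are hypothetical, and the output-group settings each real job needs are left out.

```python
from typing import List, Optional, Tuple

REGION, ACCOUNT = "us-east-1", "123456789012"   # placeholders
RQ_ARN = f"arn:aws:mediaconvert:{REGION}:{ACCOUNT}:queues/vid-buddies-queue"
ODQ_ARN = f"arn:aws:mediaconvert:{REGION}:{ACCOUNT}:queues/Default"

# Hypothetical ladder, lowest rung first: four SD and two HD renditions.
LADDER = ["sd-234p", "sd-360p", "sd-432p", "sd-540p", "hd-720p", "hd-1080p"]


def job_fields(hop_minutes: Optional[int]) -> dict:
    """Queue-routing fields for one job; None means 'stay on the RQ'."""
    fields = {"Queue": RQ_ARN}
    if hop_minutes is not None:
        fields["HopDestinations"] = [
            {"WaitMinutes": hop_minutes, "Queue": ODQ_ARN, "Priority": 0}
        ]
    return fields


def split_ladder(ladder: List[str], fast_hop: int = 1,
                 slow_hop: Optional[int] = None) -> List[Tuple[List[str], dict]]:
    """Lowest rung gets a quick hop to the ODQ; the rest waits on the RQ
    (or hops later, if slow_hop is set)."""
    return [
        (ladder[:1], job_fields(fast_hop)),
        (ladder[1:], job_fields(slow_hop)),
    ]


for renditions, fields in split_ladder(LADDER):
    print(renditions, fields.get("HopDestinations", "no hop"))
```

Passing something like `slow_hop=10` gives the main job the "5 to 10 minute" escape hatch without changing anything else.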

VidBuddies' backend would then stitch the separate renditions together into a unified multivariant m3u8 playlist (perhaps orchestrating the process with something like Temporal). The result was compelling: the tiny SD rendition would be ready to publish quite quickly without costing much to encode. From the user's perspective, their video was ready to publish, though it looked a little grainy at first. Then the RQ would finish the rest of the ladder, and once all renditions were done, VidBuddies' backend assembled the full ladder and pushed it out to the CDN. The user noticed the video suddenly looked a lot better, and all was right in the world.
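Stitching the ladder together amounts to regenerating the HLS multivariant (master) playlist whenever another rendition lands, then invalidating the cached copy on the CDN. A minimal sketch, assuming each finished rendition has its own media playlist; the paths, bitrates, and resolutions are hypothetical, only three rungs are shown for brevity, and in practice BANDWIDTH should come from each output's measured peak bitrate.

```python
from dataclasses import dataclass


@dataclass
class Rendition:
    uri: str         # relative path to this rendition's media playlist
    bandwidth: int   # peak bits per second
    width: int
    height: int


def multivariant_playlist(renditions) -> str:
    """Build an HLS multivariant playlist from whatever renditions are ready."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda r: r.bandwidth):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth},RESOLUTION={r.width}x{r.height}"
        )
        lines.append(r.uri)
    return "\n".join(lines) + "\n"


# First publish: only the fast, low rung from the hopped job exists.
early = [Rendition("sd-234p/index.m3u8", 145_000, 416, 234)]
print(multivariant_playlist(early))

# Later: the RQ job finishes more rungs; regenerate, upload, invalidate the CDN copy.
full = early + [
    Rendition("hd-1080p/index.m3u8", 6_000_000, 1920, 1080),
    Rendition("sd-540p/index.m3u8", 2_000_000, 960, 540),
]
print(multivariant_playlist(full))
```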

UX considerations come into play again: "grainy at first." This is fine for Patches' use case but may be unacceptable for some platforms. It's also important to communicate encoding status to end users, but not in a deep, technical way. Patches simply notified the user: "Your video is ready to play, but we are still optimizing it."

A Note About Failover

MediaConvert is not immune to outages, though they are rare. Like many other AWS services, MediaConvert uses regional endpoints, so one strategy for when MediaConvert is unavailable in your region is to send that work to another region. This comes at the cost of cross-region data transfer, which can get expensive given the typical size of video files.
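One way to express that fallback is an ordered region list that the submitter walks until a submission succeeds. In this sketch, `submit` is a hypothetical stand-in for whatever actually creates the job (for example, a MediaConvert client created for that region), and the `RegionUnavailable` exception and region names are placeholders. Queues are regional resources, so a failover job normally lands on the other region's on-demand queue unless you also hold reserved capacity there.

```python
from typing import Callable, Sequence, Tuple


class RegionUnavailable(Exception):
    """Hypothetical error raised by `submit` when a region's endpoint is down."""


def submit_with_failover(job: dict, regions: Sequence[str],
                         submit: Callable[[str, dict], str]) -> Tuple[str, str]:
    """Try each region in order; return (region, job_id) from the first success."""
    errors = {}
    for region in regions:
        try:
            return region, submit(region, job)
        except RegionUnavailable as exc:
            errors[region] = exc   # remember why, then try the next region
    raise RuntimeError(f"all regions failed: {sorted(errors)}")


def fake_submit(region: str, job: dict) -> str:
    """Stand-in submitter that simulates an outage in us-east-1."""
    if region == "us-east-1":
        raise RegionUnavailable("endpoint down")
    return f"{region}-job-1"


print(submit_with_failover({"Settings": {}}, ["us-east-1", "us-west-2"], fake_submit))
```

The input files typically still live in the primary region's S3 bucket, so the fallback region reads them across regions, and that is where the transfer cost comes from.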

Concluding

User complaints went down, and Patches' encoding bill stayed relatively low. VidBuddies' OpEx was looking good, and new users were pouring in. Patches figured she'd probably need to keep tuning this balance, buying more RTS capacity as the user count climbed, but for now she was content with her MediaConvert bill.

She also planned further tweaks, like choosing between ODQ and RQ dynamically based on the user: popular users would get aggressive transcoding-turnaround SLAs, while less popular users got the standard treatment.
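That per-user routing could be a small policy function sitting in front of job submission. The follower thresholds and hop times below are made up for illustration, and `hop_minutes_for` is a hypothetical helper; the returned value would feed the job's "WaitMinutes" for the hop to the ODQ, with `None` meaning no hop at all.

```python
from typing import Optional


def hop_minutes_for(followers: int) -> Optional[int]:
    """Pick how long a job may wait on the RQ before hopping to the ODQ.

    Thresholds are hypothetical; tune them against real turnaround data.
    """
    if followers >= 100_000:
        return 1       # popular creators: near-immediate ODQ fallback
    if followers >= 5_000:
        return 10      # mid-tier: tolerate a short RQ wait
    return None        # everyone else: RQ only, no ODQ spend


for f in (250_000, 12_000, 40):
    print(f, hop_minutes_for(f))
```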

Happy with the state of her transcoding infrastructure, she was now free to spend time optimizing her S3 and CloudFront bills, but that is perhaps a topic for a future post.

You Are Not a Cat

Coming back to reality, I want you, the reader, to ask yourself the following:

- What is my UX model for encoding and playback?

- What SLA am I comfortable with?

- What SLA are my users comfortable with?

Let these three questions guide the design of your MediaConvert integration. If you do decide you want to use a Reserved Queue, start with the following:

- Run some simulations to gauge turnaround time for your encoding workflows.

- Use the RTS calculator found on the Reserved Queue purchase page in the AWS Console.

- Reserved Queues are regional, and cross-region usage will incur transfer charges. It’s better to have an RQ per region (depending on your usage) than to send global work to a single region's RQ.

- There are other caveats to using a fixed-capacity system like the RQ. For example, if most of your video uploads happen on Saturday at 7pm, averages don't matter much: the RQ will not magically have more capacity just on Saturday. Keep the seasonality of your usage in mind when planning your RQ.
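To see why averages mislead, apply Little's law (jobs in flight = arrival rate × job duration) per hour instead of per month. The hourly upload counts below are invented to mimic an evening spike, and the 5-minute job time and 25-job capacity are borrowed from the VidBuddies example; with your own data, it is the peak hour, not the mean, that decides whether jobs wait or hop.

```python
def concurrency(jobs_per_hour: float, minutes_per_job: float) -> float:
    """Little's law for one hour: jobs in flight = (jobs/minute) * (minutes/job)."""
    return jobs_per_hour / 60 * minutes_per_job


# Hypothetical uploads per hour for one day (index = hour of day, 0-23).
hourly = [80, 60, 40, 30, 30, 40, 80, 150, 200, 220, 240, 260,
          280, 270, 260, 280, 320, 380, 500, 700, 520, 380, 220, 120]

avg = sum(hourly) / len(hourly)
peak_hour = max(range(24), key=lambda h: hourly[h])
print(f"average hour: {concurrency(avg, 5):.1f} jobs in flight")
print(f"peak ({peak_hour}:00): {concurrency(hourly[peak_hour], 5):.1f} jobs in flight")
```

In this made-up day the average hour fits inside 25 concurrent jobs, while the 7pm hour needs more than twice that; the excess either waits or, with queue hop enabled, bills at on-demand rates.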